The Extra Mile: Artificial intelligence, academic integrity, and AI literacy

Attribution? Important.

In this master post, you will find some background, definitions of key terms, a case study, and a section on AI literacy.

Background

In 2019, I was doing my press fellowship at the University of Cambridge, where my focus was on misinformation in the media. By extension, news coverage of artificial intelligence was also of importance to my study, as the use of AI tools could result in more sophisticated forms of misinformation.1

Among the media my programme director, John Naughton, had our cohort consume was the 2017 documentary AlphaGo (dir. Greg Kohs), about Google DeepMind’s titular computer program, which learned to play the board game Go. The game was chosen because it is considered the most difficult board game to master. The film documented AlphaGo as it challenged the legendary Lee Sedol in a five-game match, which more than 200 million people watched online.

Without giving too much away, the AlphaGo creators initially wanted the computer program to do well to show that their work was a success. But towards the end, even they seemed to be rooting for Lee, for humanity’s sake. “I think it’s clear why,” said Lee. “People felt helplessness and fear. It seemed we humans are so weak and fragile.” A victory, even in one of the five games, would mean we could still hold our own. After Lee played perhaps the most iconic move ever made in Go, tech reporter Cade Metz said, “[Lee’s] humanness was expanded after playing this inanimate creation.” If you haven’t watched the documentary, I urge you to; no prior understanding of the board game is needed. It is available for free on YouTube.

Another event Naughton arranged for the cohort was a talk by J. Scott Brennen, a communication scholar and postdoctoral research fellow at the Reuters Institute for the Study of Journalism, who presented on the state of the public conversation about AI in the British news media. “Artificial intelligence is a term that is both widely used and loosely defined,” wrote Brennen and his co-authors in a factsheet published in 2018.2 This remains true to this day.

I wrote briefly about this back in 2020, in an issue partially written by GPT (the technology ChatGPT is built on),3 and again two years later, which demonstrated my point that not much had changed.4

So, let’s take a few steps back and start with some definitions. Then, as a case study, we’ll examine a recent report on artificial intelligence and academic integrity, in which a Texas A&M University-Commerce instructor threatened to fail his class because of his own misunderstanding of how AI chatbots work. Finally, we’ll take a look at some practical approaches to integrating AI chatbots in classrooms without jeopardising the learning process (of the human students). Of course, there are many instances of ethical and technological panic over developments in the AI sector, but none quite as lowkey ironic as the panic over its use in education.

Definitions

Artificial intelligence

Machine learning

Deep learning

Natural language processing

Large language modelling

Even though they shouldn’t be, artificial intelligence (AI) and machine learning (ML) are frequently used interchangeably. AI refers to computers’ general capacity to mimic human thinking and carry out tasks in real-world settings. ML, in contrast, is a subset of AI: algorithms and statistical models that allow systems to recognise patterns, make decisions and improve their performance based on experience and data, without being explicitly programmed to do so.
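To make the difference between explicit programming and learning from data a little more concrete, here is a minimal Python sketch of my own, using made-up data (hours studied versus passing an exam). The first function is a rule a human hard-codes; the second derives its cut-off by picking whichever threshold makes the fewest mistakes on labelled examples. Real ML models are far more sophisticated, and the data and names here are hypothetical, but the principle of deriving the rule from data is the same.

```python
# Toy contrast between explicit programming and learning a rule from data.
# The data and the "fewest mistakes" threshold search are hypothetical illustrations.

def explicit_rule(hours_studied: float) -> bool:
    # Explicit programming: a human hard-codes the cut-off.
    return hours_studied >= 5.0


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Return the cut-off that misclassifies the fewest labelled examples."""
    best_threshold, best_errors = 0.0, len(examples) + 1
    for candidate in sorted(x for x, _ in examples):
        # Count examples where "x >= candidate" disagrees with the true label.
        errors = sum((x >= candidate) != label for x, label in examples)
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Made-up "experience": (hours studied, passed the exam?)
data = [(1.0, False), (2.5, False), (3.0, False), (4.0, True), (6.0, True), (7.5, True)]
threshold = learn_threshold(data)
print(threshold)  # 4.0
```

Feed the function different examples and the learned cut-off changes with them, without anyone rewriting the rule by hand.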

Deep learning, in turn, is a specific type of ML inspired by the structure and function of the human brain’s neural networks. It uses artificial neural networks with many layers of interconnected nodes (known as “deep neural networks”) to learn increasingly complex patterns in data; the “deep” in deep learning refers to those many layers. Practical examples include the personalised recommendation systems on e-commerce, streaming, and social media platforms.
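Here is a minimal sketch of a small multi-layer network in plain Python, with hypothetical, untrained weights. Each node takes a weighted sum of every output from the previous layer and passes it through a nonlinearity (tanh, my choice for this sketch); stacking several such layers is what makes a network deep. Training, where the weights are adjusted to fit data, is deliberately left out.

```python
import math


def layer(inputs, weights, biases):
    """One fully connected layer: each node sums all its weighted inputs, then applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs, network):
    """Pass the inputs through each layer in turn; more layers means a deeper network."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical, untrained weights for a 2 -> 3 -> 3 -> 1 network.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.2, -0.5, 0.3], [0.7, 0.1, -0.4], [-0.6, 0.2, 0.5]], [0.05, 0.0, -0.05]),
    ([[0.4, -0.3, 0.9]], [0.0]),
]
output = forward([1.0, 2.0], network)
print(output)
```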

Deep learning is an important part of natural language processing (NLP), even though it is only one of the techniques used within NLP. NLP is an area within AI concerned with the interactions between computers and human language. It involves developing algorithms and methods to enable computers to understand, interpret, and generate human language.

NLP and large language modelling (LLM) are related but distinct concepts. While NLP encompasses various methods to understand and process human language, LLM is a specific approach within NLP. It involves training large-scale language models on massive amounts of text data (in ChatGPT’s case, a large body of text drawn from the Internet up to September 2021). The models learn to predict the next word (more precisely, the next token) from the patterns and linguistic structures in their training data, which lets them generate coherent, contextual text responses.
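At their core, language models are trained to predict what comes next in a piece of text. As a toy illustration, the sketch below counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. This is a bigram model, a drastically simplified stand-in I’ve chosen for illustration: real LLMs use deep neural networks over tokens and take far more context into account.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent next word seen in training, or None if the word is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
```

“The” is followed by “cat” twice and “mat” once in the corpus, so “cat” is the prediction; an unseen word gets no prediction at all, which is one reason real models train on vastly more text.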

LLMs power chatbots such as Bing Chat and ChatGPT. Virtual assistants such as Amazon’s Alexa, Apple’s Siri and Google Assistant also rely on NLP, though they have largely been built on other NLP techniques rather than on LLMs.

Case study

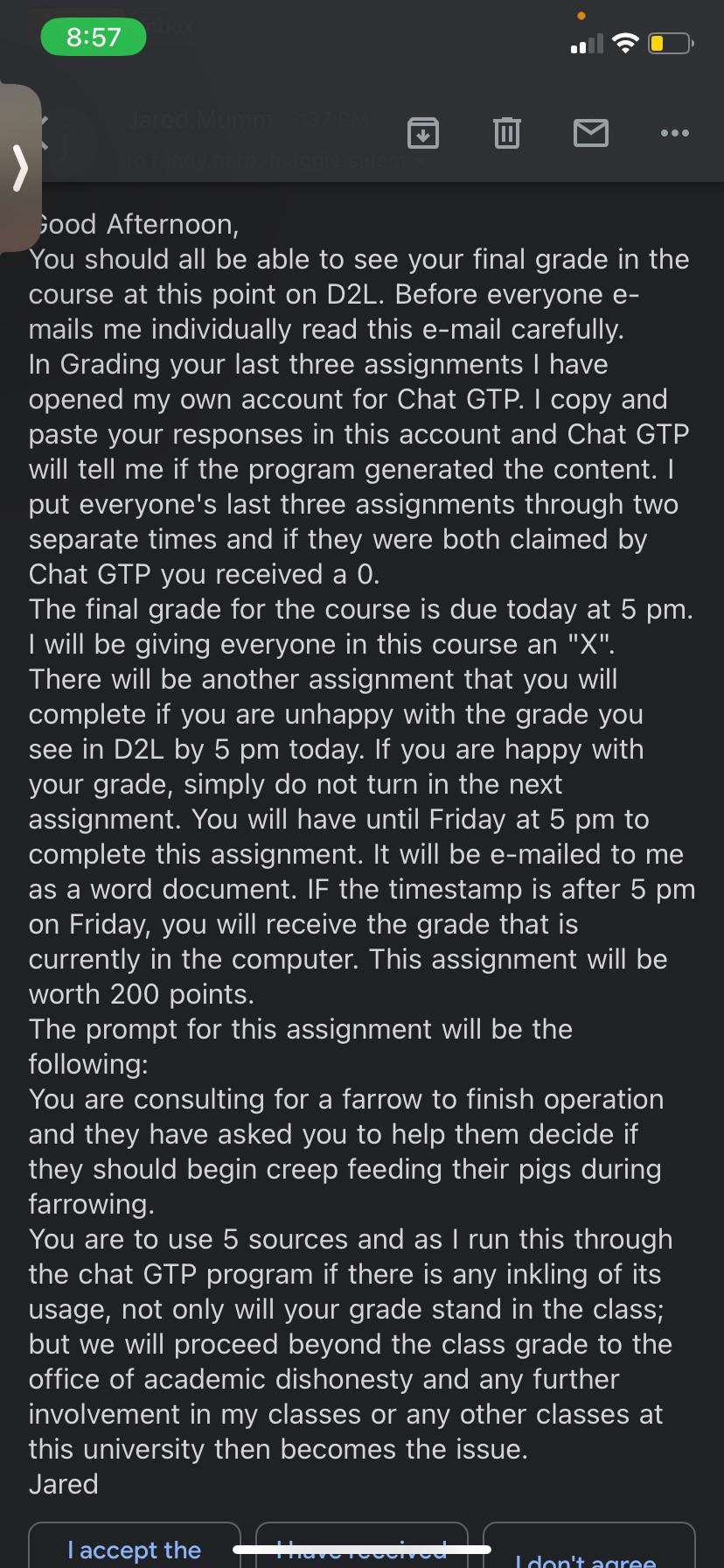

On 15 May, Redditor @DearKick posted the following screenshot to the subreddit r/ChatGPT (text version below the image):

Text version (unedited):

Good Afternoon,

You should all be able to see your final grade in the course at this point on D2L. Before everyone e-mails me individually read this e-mail carefully. In Grading your last three assignments I have opened my own account for Chat GTP. I copy and paste your responses in this account and Chat GTP will tell me if the program generated the content. I put everyone's last three assignments through two separate times and if they were both claimed by Chat GTP you received a 0.

The final grade for the course is due today at 5 pm. I will be giving everyone in this course an “X”. There will be another assignment that you will complete if you are unhappy with the grade you see in D2L by 5 pm today. If you are happy with your grade, simply do not turn in the next assignment. You will have until Friday at 5 pm to complete this assignment. It will be e-mailed to me as a word document. IF the timestamp is after 5 pm on Friday, you will receive the grade that is currently in the computer. This assignment will be worth 200 points.

The prompt for this assignment will be the following:

You are consulting for a farrow to finish operation and they have asked you to help them decide if they should begin creep feeding their pigs during farrowing.

You are to use 5 sources and as I run this through the chat GTP program if there is any inkling of its usage, not only will your grade stand in the class; but we will proceed beyond the class grade to the office of academic dishonesty and any further involvement in my classes or any other classes at this university then becomes the issue.

Jared

When I first saw this post, four hours after it went up, it hadn’t blown up yet, but it was heating up. Considering Jared’s flawed understanding of how “Chat GTP” (sic) works, I already knew how vicious the news headlines and social media responses would be. And that’s really not helpful for improving AI literacy at a time when panic and paranoia about AI are running high.

But first, let me tell you what transpired in the first few hours after the post went up, before the media picked it up. Like many other Redditors in the replies, I put Jared’s email through ChatGPT and asked if it had written it; it said yes. In fact, staying true to Jared’s methodology, I did it two separate times. I also found his 2017 PhD dissertation, which, at that point, was the last item on the first page of Google results for his name. You can guess what I did with it, and you can guess what ChatGPT claimed.

This isn’t a problem of age or education. Jared is not an uneducated boomer, as some initially suspected. Based on his CV, Jared seems to be an older millennial, like me, with a PhD, unlike me. Yet, I’m quite certain there’s a huge difference in our AI literacy scores.

Redditor @noveler7 wrote:

As a professor myself (older than him, but not by much), I just can’t imagine blindly trusting some AI detection tool so early in this stage of this tech’s development. I had student papers this semester that had some sections that got flagged for AI even when I was there helping them with the writing and editing process.

The user continued:

A lot of professors are paranoid about plagiarism, and I get it, but at some point, you just have to triangulate all the different types of assessment, read and learn to recognise some hallmarks of AI writing, develop a good rapport with your students, and do your best to evaluate them honestly.

Redditor @Taniwha_NZ called out:

What is most damning is that after ‘detecting’ the first half-dozen or so, this guy never stopped to wonder if his method was flawed, instead preferring to assume that every single student was a rampant cheater. And even getting right to the end, he still couldn’t look at the entire class of cheaters and wonder if maybe there was something else happening.

Another Redditor, @smughippie, wrote:

As a professor, I am amazed that my colleagues will spend a stupid amount of time trying to figure out if a response is AI (and in a manner that won’t tell you either way) rather than spend that same amount of time designing assignments that don’t play well with AI. I personally am planning to use AI to teach writing. The way I see it, my students will use it. I might as well teach them how to use it responsibly.

Redditor @Smooth-Definition205 suggested:

There needs to be an AI introductory class that is mandatory for teachers and everyone else to understand the biases of AI and how it functions. It is new and catching on very quickly but not everyone that uses it is going to be literate in AI development and progression of it.

Plus, @rednoise pointed out:

There’s lots of academic use cases for ChatGPT that don’t bleed into academic dishonesty.

We’ll get into that. But this case does raise several questions. The university in question should certainly have a formal procedure for addressing allegations of academic misconduct, and this couldn’t be it. The instructor evidently isn’t an expert on AI, so why isn’t he following due process?

At the core of this case study is the question of academic integrity. Academic integrity means ethical, honest, and rigorous research and publishing. It means avoiding cheating and plagiarism. It embraces fairness, honesty, trust, respect, responsibility and courage. Most academic institutions have some form of moral code and ethical committee. Students typically have to take an ethics class, or these concepts are included in their syllabi. But academic dishonesty still plagues the education system.

Plagiarism is a tale as old as time. And although detection services take various approaches to flagging plagiarism or copyright infringement, they are still not 100 per cent effective.5 Similarly, AI chatbot detectors such as GPTZero have returned false positives, including claims that the US Constitution and the Bible were written by AI. Plagiarism can be unintentional, too. Most educators know not to trust these tools blindly. They use them to inform their judgement of the originality of their students’ work and rely on common sense to reach a decision. They are trying to be teachers, not investigators.
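Why do false positives matter so much? Here is a back-of-the-envelope Python sketch with entirely made-up numbers: a class of 30, 10 per cent of whom used AI, and a hypothetical detector that catches 90 per cent of AI-written work but also wrongly flags 10 per cent of honest work. None of these rates describes any real tool; the point is the arithmetic.

```python
def flag_outcomes(class_size, share_using_ai, sensitivity, false_positive_rate):
    """Expected honest students flagged, and the share of flagged students who really used AI.

    All inputs are hypothetical rates for an imaginary detector.
    """
    ai_users = class_size * share_using_ai
    honest = class_size - ai_users
    true_flags = ai_users * sensitivity          # AI users correctly flagged
    false_flags = honest * false_positive_rate   # honest students wrongly flagged
    # Precision: of everyone flagged, what fraction actually used AI?
    precision = true_flags / (true_flags + false_flags)
    return false_flags, precision


false_flags, precision = flag_outcomes(
    class_size=30, share_using_ai=0.1, sensitivity=0.9, false_positive_rate=0.1
)
print(round(false_flags, 1), round(precision, 2))  # 2.7 0.5
```

Under those assumptions, almost three honest students are flagged on average, and a flag is no better than a coin toss. Keep in mind, too, that ChatGPT is not designed to detect AI-written text at all, and running the same text through the same tool twice does not amount to two independent checks.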

But it’s also a question of AI literacy. With these AI tools now widely available, we sometimes panic, misunderstand them, and make things blow up in a way they shouldn’t. It’s a mistake, and I’d like to think that it’s unintentional. The “Turnitin culture” does that to you sometimes, because it feels like “Post-Truth Era 2.0,” where the all-knowing is unknowable, and that’s scary. In fact, educator and ethicist Sarah Elaine Eaton has contemplated the future of plagiarism and academic integrity, calling this the “post-plagiarism age” of artificial intelligence.6 So, let’s get into that now.

AI literacy

In April 2023, I attended a talk on artificial intelligence and academic integrity with speakers from Brock University. The three speakers, Rahul Kumar, Michael Mindzak, and Sunaina Sharma, touched on the technical, philosophical, and practical aspects of the subject. This section is largely lifted from “The 156th Block.”7

Before the talk, I speculated about what might be brought up:

First, we like to think we are superior to machines and that we can always tell when something is AI-generated.

Second, educators will find a way to work around students’ use of AI chatbots in the classroom.

And third, mainstream AI will change our language mastery and communication.

Let’s go through them, and I’ll explain how they were addressed during the talk.

We can’t always tell

No, we can’t. Kumar and colleagues demonstrated this with a simplified, modified Turing Test.8 The speakers did a similar demonstration at the talk:

We were presented with three texts that responded to the same prompt. Only one was written by a human. We had to choose which one we thought it was and why. I chose one at random. I never claimed I could tell machine writing and human writing apart. It was as good as a coin toss, if a coin had three sides.

One person said Text A was long-winded, like herself; thus, it was human-generated. Another person said he chose Text B because it was descriptive and not a definition, while someone else said that he chose Text C precisely because it was a definition, not a description. In other words, nobody knows which criteria to use to decide what’s human and what’s not, because all of them seem valid.

We will find a way

If we can’t always tell, then what are we going to do about AI in academia? Ban it? Embrace it? I shared the chart below on “The 156th Block.” Based on the case study in the previous section, where would you plot Jared on the chart? In comparison, where would you plot yourself?

Students will use AI chatbots anyway, whether it is allowed or not. There are opportunities for the legitimate inclusion of AI chatbots in education,9,10 the same way the calculator found its place in classrooms. In one example the speakers provided, a math teacher presented his class with the following problem:

A piece of wire 10 m long is cut into two pieces. One piece is bent into a square, and the other into a circle. How should the wire be cut so that the total area enclosed is a maximum? (I reproduced this from memory, so it might not be accurate, but that’s inconsequential.)

The assignment was to input this problem into ChatGPT and, based on its response, explain whether ChatGPT’s answer should be accepted and why. The students showed that ChatGPT’s suggestion was not ideal and devised a much better solution.
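For anyone who wants to check the maths, here is the standard calculus working for the problem as reproduced above. It assumes x metres of wire go into the square and the remaining 10 − x metres into the circle, and it allows either piece to have zero length. This is my own working, not the teacher’s or the students’.

```latex
\begin{aligned}
A(x) &= \left(\frac{x}{4}\right)^2 + \pi\left(\frac{10 - x}{2\pi}\right)^2
      = \frac{x^2}{16} + \frac{(10 - x)^2}{4\pi}, \qquad 0 \le x \le 10 \\
A'(x) &= \frac{x}{8} - \frac{10 - x}{2\pi} = 0
      \;\Longrightarrow\; x = \frac{40}{4 + \pi} \approx 5.60 \\
A''(x) &= \frac{1}{8} + \frac{1}{2\pi} > 0 \quad \text{(so this critical point is a minimum)} \\
A(0) &= \frac{25}{\pi} \approx 7.96\ \text{m}^2, \qquad A(10) = \frac{100}{16} = 6.25\ \text{m}^2
\end{aligned}
```

The tempting answer is the critical point, but it minimises the total area. The maximum sits at an endpoint: to enclose the largest area, don’t cut the wire at all and bend the whole thing into a circle. That is exactly the kind of trap that makes a chatbot’s answer worth interrogating.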

We might communicate differently

“English is the most important programming language.” I’m sure you’ve read variations of this saying on LinkedIn, Reddit or Twitter. You can get AI chatbots to write code in any programming language you wish, even if you have no prior knowledge of it, as long as you know how to write the prompts.

Surely you have also heard about how prompt writer or prompt engineer jobs are the next big thing, boasting six-figure salaries. Yet writing a good prompt is not as easy as you might think. Anyone can write, but not everyone will be a good author. Similarly, anyone can use AI chatbots, but not everyone will be a good prompter. Additionally, just like many popular search engines, most AI chatbots work best with English. That could widen the language gap in diverse classrooms like Canada’s, especially without the necessary support for AI literacy on top of English literacy.

Plus, it’s not just a matter of language mastery; it’s a matter of understanding the logical operations of the service. For example, search engines offer many ways to refine your results, such as search operators, yet most people still don’t use them effectively, or at all.
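As a small illustration of search operators, here is a hypothetical Python helper that assembles a query using three that Google supports: quotation marks for an exact phrase, site: to limit results to a domain, and a minus sign to exclude a word. The helper is my own, and support and exact behaviour vary between search engines, so treat it as a sketch.

```python
from urllib.parse import urlencode


def build_query(terms, exact_phrase=None, site=None, exclude=()):
    """Combine plain search terms with common search operators (hypothetical helper)."""
    parts = list(terms)
    if exact_phrase:
        parts.append(f'"{exact_phrase}"')          # quotes: match the exact phrase
    if site:
        parts.append(f"site:{site}")               # site: restrict results to a domain
    parts.extend(f"-{word}" for word in exclude)   # minus: exclude pages with this word
    return " ".join(parts)


query = build_query(["AI", "detection"], exact_phrase="false positives", site="edu", exclude=["pricing"])
print(query)  # AI detection "false positives" site:edu -pricing

# urlencode escapes spaces, quotes and colons so the query can go in a URL.
url = "https://www.google.com/search?" + urlencode({"q": query})
print(url)
```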

I wondered if the proliferation of AI chatbot usage would change communication styles since you must be precise with your prompts by specifying context, tone, audience, and so on. Will this neutralise the effects of using virtual assistants such as Siri and Alexa, which are reportedly causing kids to be terse, even rude?11
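Here is a minimal sketch of what specifying context, task, audience and tone can look like in practice. The helper and its field names are my own hypothetical template, not a format any particular chatbot requires; it simply assembles the pieces into one prompt you could paste into a chatbot of your choice.

```python
def build_prompt(context: str, task: str, audience: str, tone: str, length: str) -> str:
    """Assemble a structured prompt from its parts (hypothetical template)."""
    sections = {
        "Context": context,
        "Task": task,
        "Audience": audience,
        "Tone": tone,
        "Length": length,
    }
    # One labelled line per section keeps each requirement explicit.
    return "\n".join(f"{label}: {value}" for label, value in sections.items())


vague_prompt = "explain AI"
prompt = build_prompt(
    context="I teach a Grade 11 media literacy class.",
    task="Explain what a large language model is and one of its limitations.",
    audience="Secondary school students with no technical background.",
    tone="Friendly and plain-spoken.",
    length="About 150 words.",
)
print(prompt)
```

Compared with the vague version, the structured prompt tells the chatbot who the answer is for and what shape it should take, which is precisely the kind of precision I suspect could carry over into how we communicate.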

This wasn’t addressed during the presentation, so I raised it during the Q&A session, and the speakers highlighted the following:

Prompt optimisers, such as Perfect Prompt, are AI-based services where you can input your raw prompts to generate higher-quality prompts to feed into your AI chatbot of choice. So you don’t necessarily need to have a good command of the language to maximise your AI chatbot use, because there is an AI service for that.

Most generative AI programs are text-to-text, text-to-image or text-to-audio. However, according to the speakers, the next big thing is probably speech-to-text models, which may be most beneficial for special education needs. Personally, I look forward to an image-to-image model. I hope to be able to draw squiggly lines and curves and have an AI service produce a higher-quality version of my doodles. That way, I could be a fashion designer without knowing how to draw, and without the technical vocabulary to describe in words what I have in mind.

In summary, we need to improve our AI literacy to preserve academic integrity. Coming from a family of educators and having married an educator who also comes from a family of educators, I know one thing about teachers: You can probably trick them once, maybe even twice, but sooner or later, they will catch on to what’s going on, and their students will learn their lessons one way or another.

Tina Carmillia. (2021). The Sidelines: Beyond Katie Jones. The Starting Block. https://tinacarmillia.substack.com/p/sidelines-munira-mustaffa

Brennen, J. S., Howard, P. N., & Nielsen, R. K. (2018). An industry-led debate: How UK media cover artificial intelligence. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2018-12/Brennen_UK_Media_Coverage_of_AI_FINAL.pdf

Tina Carmillia. (2020). The 19th Block: The guardian of the theatrical AI coverage. The Starting Block. https://tinacarmillia.substack.com/p/the-19th-block

Tina Carmillia. (2022). The 117th Block: Against poor reporting and ‘poor’ reporting. The Starting Block. https://tinacarmillia.substack.com/p/the-117th-block

Youmans, R. J. (2011). Does the adoption of plagiarism-detection software in higher education reduce plagiarism? Studies in Higher Education, 36(7), 749–761. https://www.tandfonline.com/doi/abs/10.1080/03075079.2010.523457

Eaton, S. E. (2023). 6 Tenets of Postplagiarism: Writing in the Age of Artificial Intelligence. Learning, Teaching and Leadership. Retrieved from https://drsaraheaton.wordpress.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

Tina Carmillia. (2022). The 156th Block: Academic integrity and AI. The Starting Block. https://tinacarmillia.substack.com/p/the-156th-block

Kumar, R., Mindzak, M., & Racz, R. (2022). Who Wrote This? The Use of Artificial Intelligence in the Academy. https://dr.library.brocku.ca/bitstream/handle/10464/16532/Conference%20Presentation-final.pdf?sequence=1&isAllowed=y

Cotton, D. R. E., Cotton, P. A., & Shipway, J. R. (2023). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International. https://www.tandfonline.com/doi/full/10.1080/14703297.2023.2190148

Moya, B., Eaton, S. E., Pethrick, H., Hayden, K. A., Brennan, R., Wiens, J., McDermott, B., & Lesage, J. (2023). Academic Integrity and Artificial Intelligence in Higher Education Contexts: A Rapid Scoping Review Protocol. Canadian Perspectives on Academic Integrity, 5(2), 59–75. https://journalhosting.ucalgary.ca/index.php/ai/article/view/75990

Hill, A. (2022). Voice assistants could ‘hinder children’s social and cognitive development’. The Guardian. https://www.theguardian.com/technology/2022/sep/28/voice-assistants-could-hinder-childrens-social-and-cognitive-development
