This week…

I updated the checklist in my fact-checking master post with some open-source tools for fact-checking and verification. Next week, I will add another section on sensible steps to improve online privacy.

And for our interview segment, I speak with Tan Su Lin, co-founder of Science Media Centre Malaysia, about the tiny, fragmented science communication community in Malaysia and how her organisation is improving the language around science in the media. You can find the full transcript at the link below. But first, a selection of top stories on my radar, a few personal recommendations, and the chart of the week.

Reporting on scientific failures and holding the science community accountable

The Journalist’s Resource’s Denise-Marie Ordway speaks with the authors of a paper showing that negative news coverage of science without adequate context can erode public trust:

Advancing knowledge takes lots of trial and error. Also, scientists continually check, critique and try to replicate one another’s work, which means they are bound to run across mistakes and other shortcomings on occasion. As scientists build knowledge, they correct those issues and make other changes in response to new information, including new research findings, theories and data.

[…]

The bottom line: Trust science — the scientific process and scientific consensus — over individual scientists.

WhatsApp fuels surge of fake news in India

Katharina Buchholz for Statista:

A scientific study carried out by doctors from Rochester, New York, and Pune, India, and published in the peer-reviewed Journal of Medical Internet Research … found that around 30% of Indians used WhatsApp for COVID-19 information, and just about as many fact-checked less than 50% of messages before forwarding them. 13% of respondents even said that they never fact-checked messages before forwarding on WhatsApp.

Surprised? No.

AI-generated fake reports fool experts

Researchers Priyanka Ranade, Anupam Joshi and Tim Finin, writing for The Conversation (excerpt):

To illustrate how serious this is, we fine-tuned the GPT-2 transformer model on open online sources discussing cybersecurity vulnerabilities and attack information. We then seeded the model with the sentence or phrase of an actual cyberthreat intelligence sample and had it generate the rest of the threat description. We presented this generated description to cyberthreat hunters, who sift through lots of information about cybersecurity threats.

The cybersecurity misinformation examples we generated were able to fool cyberthreat hunters, who are knowledgeable about all kinds of cybersecurity attacks and vulnerabilities.

Unlike the other stories I share, I did not fact-check this one, because I secretly (now openly) hope that the article itself was AI-generated. Maybe you can use the tools and techniques from the master post above to tell me whether it’s real.

What I read, watch and listen to…

I’m reading Christopher Cheung’s opinion piece for the Toronto Star about how journalism has become a poor reflection of the world.

I’m watching Khadija Mbowe’s video essay on algorithms, colourism, and being dark on BreadTube.

I’m listening to When AI Becomes Child’s Play, an episode of the podcast In Machines We Trust that explores children’s relationship with AI.

I’m making nasi impit.

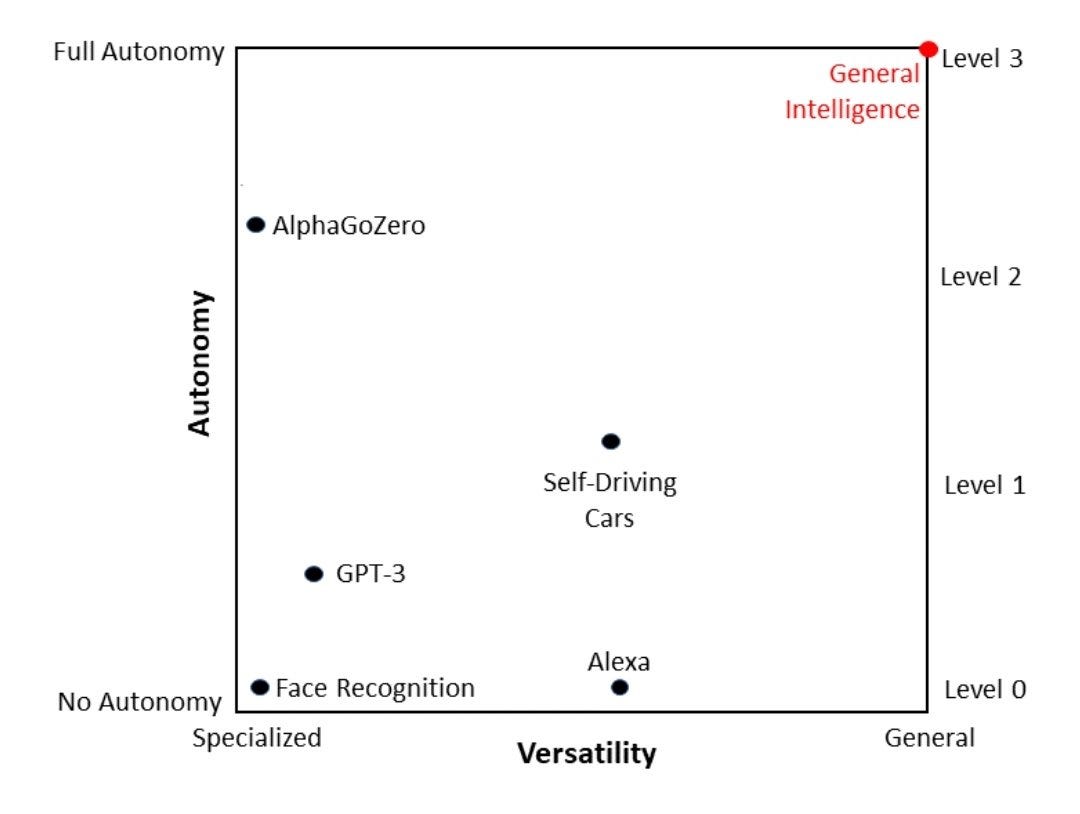

Chart of the week

The most beautiful figure on the Internet comes from the essay Between Golem and God: The Future of AI, by Ali Minai: