This week…

Your reading time is about 6 minutes. Let’s start.

Once upon a time, Canada thought Big Tech would back down when legislated to pay media companies for news stories on their platforms, just like they did in Australia. Yet, now, Meta is blocking news on its Facebook and Instagram platforms because of one factor that did not appear in the Canadian Online News Act: “designation.”

You see, the News Media Bargaining Code enabled the Australian government to designate any online platform to pay for news. And even though Meta and Google were not designated at that point, the Big Bad Big Tech felt the looming threat of designation, so they began to make separate agreements with more than two dozen media companies in Australia. These commercial negotiations generated more than A$200 million last year for Australian news organisations.

But the important thing is Big Tech got to do that on their own terms, just between tech companies and media companies, because Big Tech don’t like government regulations intervening in their business and all that. And everybody got to pat themselves on the back! Not Canadians, though.

And now, a selection of top stories on my radar, a few personal recommendations, and the chart of the week.

ICYMI: The Previous Block showed how the Internet connects – and disconnects – the world. CORRECTION NOTICE: Updated with a missing hyperlink to the quote used in the second story.

In news headlines, some civilian casualties are more valuable than others

Esther Brito Ruiz and Jeff Bachman for Nieman Lab:

As scholars who study genocide and other mass atrocities, as well as international security, we compared New York Times headlines that span approximately seven and a half years of the ongoing conflict in Yemen and the first nine months of the conflict in Ukraine.

We paid particular attention to headlines on civilian casualties, food security and provision of arms. We chose The New York Times because of its popularity and reputation as a credible and influential source on international news, with an extensive network of global reporters and over 130 Pulitzer Prizes.

Purposefully, our analysis focused solely on headlines. While the full stories may bring greater context to the reporting, headlines are particularly important for three reasons: They frame the story in a way that affects how it is read and remembered; reflect the publication’s ideological stance on an issue; and, for many news consumers, are the only part of the story that is read at all.

Our research shows extensive biases in both the scale and tone of coverage. These biases lead to reporting that highlights or downplays human suffering in the two conflicts in a way that seemingly coincides with U.S. foreign policy objectives.

We know.

Swedish daily Aftonbladet finds people spend longer on articles with AI-generated summaries

Aisha Majid for Press Gazette:

Aftonbladet began experimenting with ChatGPT in its newsroom at the start of the year with the goal of creating a tool to help it test out what generative AI could do.

The result was an AI-generated article summary that readers can choose to open within news articles. The initial results, deputy editor Martin Schori told Press Gazette, have been positive.

Unexpectedly, audiences spend longer reading articles that have summaries than those without. According to Schori, the findings surprised the newsroom, which initially thought the findings were a mistake.

Instead, Schori explained that since readers get a more general understanding of an article upfront, they are more likely to go on and read the whole text.

One good example of AI use in the newsroom.

ChatGPT broke the Turing test — the race is on for new ways to assess AI

Celeste Biever for Nature:

The world’s best artificial intelligence (AI) systems can pass tough exams, write convincingly human essays and chat so fluently that many find their output indistinguishable from people’s. What can’t they do? Solve simple visual logic puzzles.

In a test consisting of a series of brightly coloured blocks arranged on a screen, most people can spot the connecting patterns. But GPT-4, the most advanced version of the AI system behind the chatbot ChatGPT and the search engine Bing, gets barely one-third of the puzzles right in one category of patterns and as little as 3% correct in another, according to a report by researchers this May.

The team behind the logic puzzles aims to provide a better benchmark for testing the capabilities of AI systems — and to help address a conundrum about large language models (LLMs) such as GPT-4. Tested in one way, they breeze through what once were considered landmark feats of machine intelligence. Tested another way, they seem less impressive, exhibiting glaring blind spots and an inability to reason about abstract concepts.

“People in the field of AI are struggling with how to assess these systems,” says Melanie Mitchell, a computer scientist at the Santa Fe Institute in New Mexico whose team created the logic puzzles (see ‘An abstract-thinking test that defeats machines’).

So, the hallmark of human intelligence is… being able to make abstractions and apply them to new problems? I need to know if the 95 participants in the study are representative of the population because I have *questions.*

What I read, listen to, and watch…

I’m reading “Automating Democracy: Generative AI, Journalism, and the Future of Democracy,” a report by Amy Ross Arguedas and Felix M. Simon from the Oxford Internet Institute.

I’m listening to an episode of Ologies on neurotechnology with Nita Farahany and host Alie Ward.

I’m also watching Farahany’s talk on our right to mental privacy in the age of brain-sensing tech.

Other curious links:

“The hidden cost of the AI boom: social and environmental exploitation” by Ascelin Gordon, Afshin Jafari, and Carl Higgs for The Conversation.

“Facebook to unmask anonymous Dutch user accused of repeated defamatory posts” by Ashley Belanger for Ars Technica.

“Top athletes eligible to immigrate to Canada under self-employed category” by Beatrice Nkundwa for New Canadian Media.

“Jakarta’s roadside food sellers” by Arran Ridley, Nabilah Said, and Sarah Shamim for Kontinentalist.

“Los ‘deepfakes’ de voz engañan incluso cuando se prepara a la gente para detectarlos” by Emanoelle Santos for El País.

« Le Canada prend des risques en avançant seul dans la taxation des géants du numérique » by Brian Myles for Le Devoir.

Chart of the week

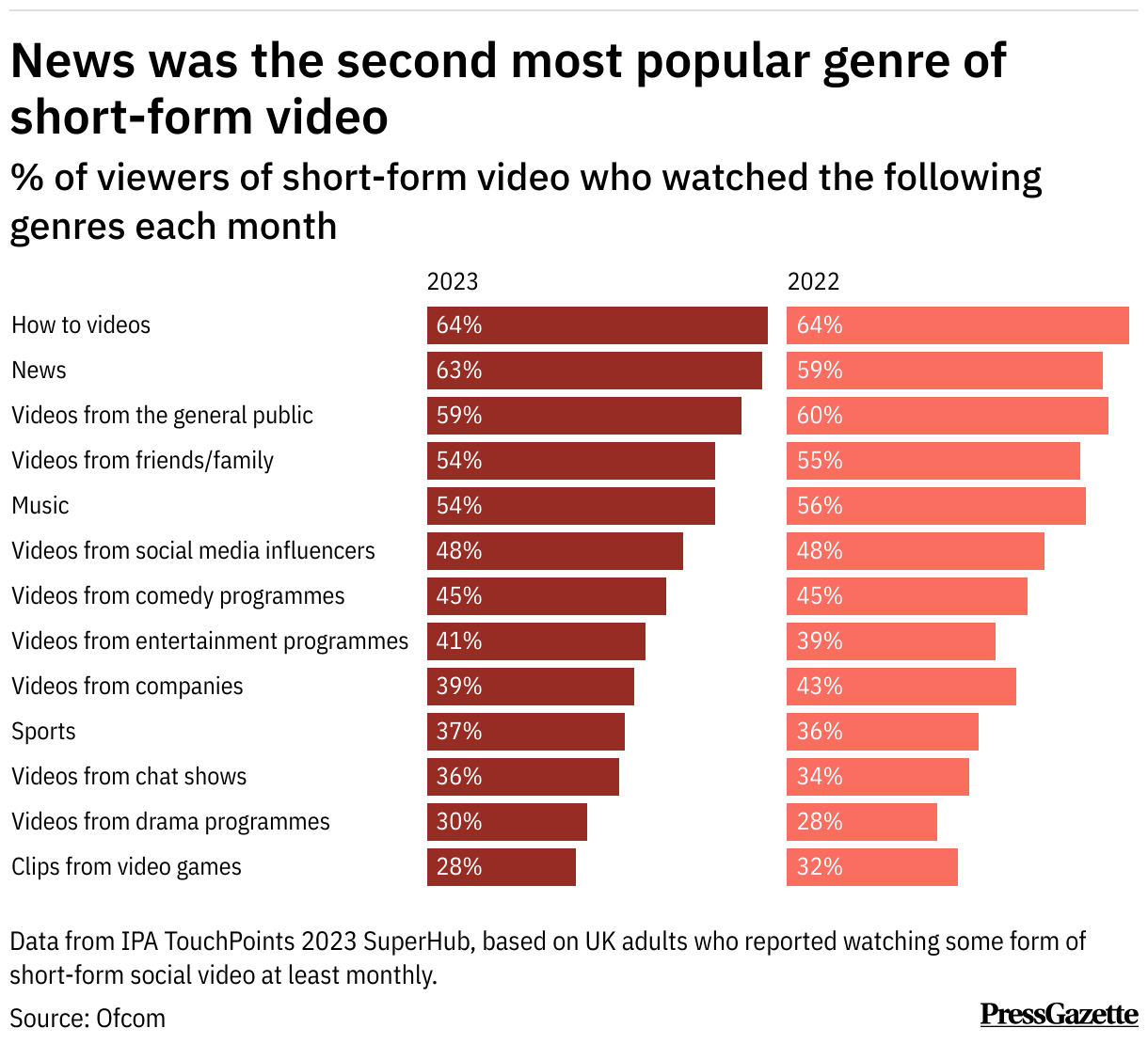

I don’t think this has happened before, but Press Gazette’s Aisha Majid features twice in this edition. This time, she visualises a new Ofcom report showing that news is the second most popular genre of short-form video in the UK (63 per cent, up from 59 per cent last year), just behind how-to videos (64 per cent).

And one more thing

Robin Kwong, the new formats editor at WSJ, releases a guide to newsroom project management. As someone with experience in project management and editorial-driven projects, I think more newsroom personnel should be reading this, whether or not they manage projects in their media organisations.