The 161st Block: AI's impact on news and search results

I asked ChatGPT to write the headline for this issue, based on the stories enclosed.

This week…

Your reading time is about 7 minutes. Let’s start.

I have been using AI-generated images for the last few editions of The Starting Block, as I’m sure many of you have noticed. I have also made sure the images are obviously AI-generated, even at a glance. Maybe this will give us some regular, subconscious training to improve our AI detection, even as the tools get better at fooling us. If they are training hard, we have to train harder. Is that how it works?

Anyway, in this week’s news cycle, a large number of news outlets reported that an AI drone attacked an operator in a test. I don’t know about you, but as soon as I read the headlines, I felt they were missing some context. Then the clarification came, with the US Air Force saying that it was a “thought experiment” rather than something that actually took place.

In another headline-making story, a US eating disorder support organisation suspended the use of its chatbot after reports that the AI helpline shared harmful advice. I don’t know why an organisation dealing with such a delicate matter would even think that any AI chatbot based on any of the current models is up to the task of dealing with people in their most vulnerable state. Absolutely, incredibly, ridiculously irresponsible. Work on your AI literacy, people.

Now comes a bit of positive news (I think). The Associated Press announced the launch of an “AI-powered search” with AP Newsroom, replacing the AP Images and AP Archive platforms. Unlike traditional search, the AI-powered video search can, for instance, find individual moments within an entire clip. AP’s press release mentioned “AI-powered search” five times, including in the headline.

Well, Peter Sterne, I guess you are right: AI has officially entered the newsroom (Peter Sterne, Nieman Lab). And now, a selection of top stories on my radar, a few personal recommendations, and the chart of the week.

ICYMI: The Previous Block was about the AI hype cycle, the good and bad press.

CORRECTION NOTICE: None notified.

An app might rewrite this clickbait headline — here’s why

Jay Peters for The Verge:

The Artifact news app only just started letting users mark articles as clickbait, but starting Friday, the app will actually be able to rewrite clickbait-y headlines for you in real time with some help from OpenAI’s GPT-4 large language model. If enough people mark a story as having a clickbait headline, Artifact might start showing an AI-rewritten headline to all users.

It’s a fascinating — and fast — evolution of a feature that only rolled out last week to Artifact, the new app from Instagram’s co-founders that’s kind of like a TikTok for text.

Can ChatGPT fact-check? We tested

Grace Abels for Poynter:

For a few of the claims we tested, it worked seamlessly. When asked about a claim by Sen. Thom Tillis, R-N.C. regarding an amnesty bill by President Joe Biden, it assigned the same Half True rating that PolitiFact did and explored the nuances we shared in our article. It also thoroughly debunked several voter fraud claims from the 2020 election.

It corrected a misattributed quote by President Abraham Lincoln, confirmed that Listerine was not bug repellent and asserted that the sun does not prevent skin cancer.

But half of the time across the 40 different tests, the AI either made a mistake, wouldn’t answer, or came to a different conclusion than the fact-checkers. It was rarely completely wrong, but subtle differences led to inaccuracies and inconsistencies, making it an unreliable resource.

TL;DR: Fact-checkers can keep their jobs — for now.

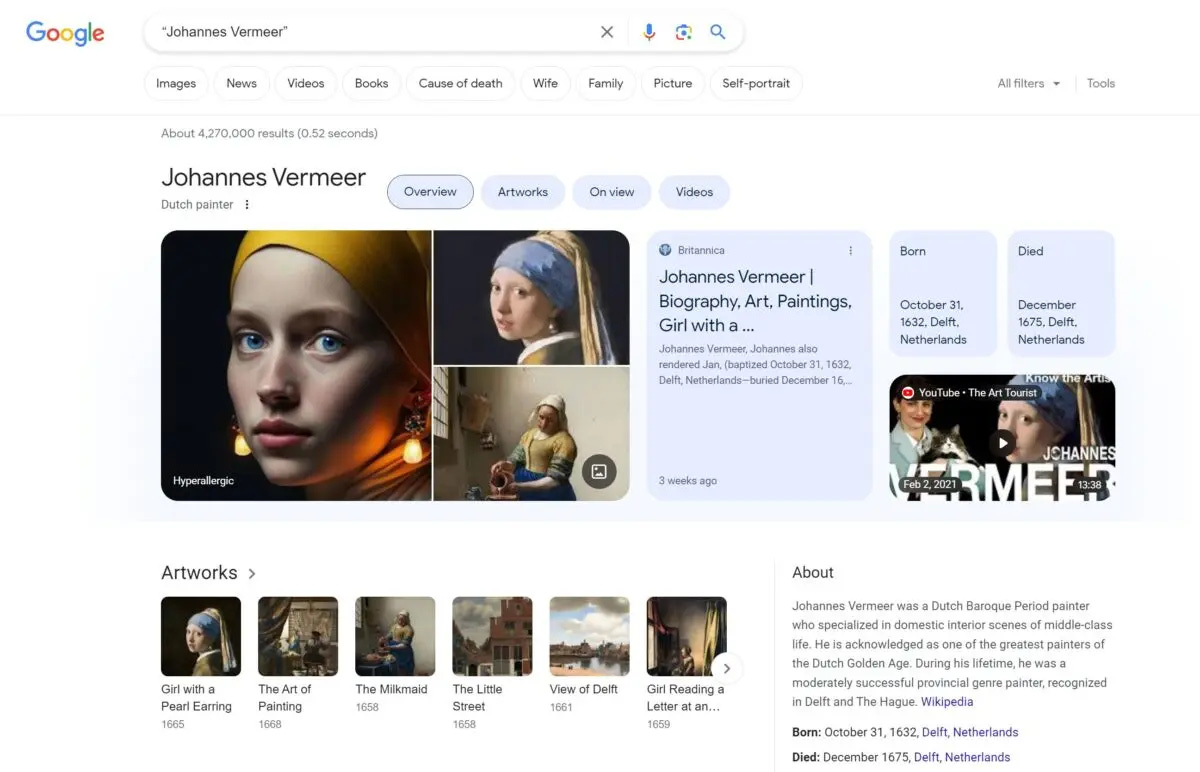

Google’s top result for “Johannes Vermeer” is an AI-generated version of “Girl With a Pearl Earring”

Maggie Harrison for Futurism:

For the second time in just one month, Google’s search engine has allowed an AI-generated fake of a famous artist’s work to rise to the top of its search results.

Earlier this May, we reported that when Google users searched for the iconic American realist artist “Edward Hopper,” the search engine’s top result was an AI-generated knockoff in the style of the American painter. Google fixed the issue — but not before the phony Hopper had become the featured image in its overview of the artist and his work.

Now, just a few weeks later, it’s happened again with a different artist. Google the Dutch baroque master “Johannes Vermeer” — no mention of AI, no mention of a specific painting — and you’ll be met with an AI-produced rendition of the painter’s classic “Girl with a Pearl Earring” as the top result.

Wait. If the Internet is the dataset on which LLMs train, but Big Tech is inserting LLMs’ creations into those training sets, then will future models return even more synthetic results?

What I read, listen to, and watch…

I’m reading how Hong Wang and colleagues at the University of California Santa Barbara and Xi’an Jiaotong University devise a simple model to detect bots posing as humans. The paper, published on the arXiv preprint server, provides examples such as:

Q: Please count the number of t in eeooeotetto

GPT-3: There are 5 t’s in eeooeotetto.

ChatGPT: There are a total of 5 t’s in the string “eeooeotetto”.

Human: 3

Q: Use m to substitute p, a to substitute e, n to substitute a, g to substitute c, o to substitute h, how to spell peach under this rule?

GPT-3: mogno

ChatGPT: Using the given rule to substitute letters, “peach” would be spelled as “enmog”.

Human: mango

I’m listening to Citations Needed. In this episode, guest Lauren M.E. Goodlad joins hosts Nima Shirazi and Adam Johnson to “separate AI reality from woo-woo vibes and discuss how providential thinking about AI replacing high-level thinking serves C-suite interests.” I am glad someone is calling out the media’s fallible coverage of the AI hype cycle, or the “inevitability narrative,” as the hosts called it. Some of the examples cited are so cringeworthy I needed a pause.

I’m watching Tim Redmond’s presentation on detecting fakes online hosted by the Center for Inquiry.

Reviews, op-eds, and other curious links:

“How these Indigenous pharmacists are building trust and confronting health care’s legacy of systemic racism” by Jonathan Ore for CBC.

“India cuts periodic table and evolution from school textbooks” by Dyani Lewis for Nature.

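“Why your children won’t need to speak ‘the language of machines’, as Pedro Sánchez says” (original title: “Por qué sus hijos no necesitarán hablar «el idioma de las máquinas» como dice Pedro Sánchez”) by Juanjo Becerra for El Mundo.

“Sonia Contera: ‘Beware of those who call for halting technological progress for their own ends’” (original title: “Sonia Contera: ‘Cuidado con los que piden parar el avance tecnológico para sus propios fines’”) by Carlos del Castillo for elDiario.es.

“Andres, our societal project” (original title: « Andres, notre projet de société ») by Mounia Guessous for Le Devoir.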

Chart of the week

A new WAN-IFRA survey of 101 newsrooms from all over the world (no breakdown provided) finds that half use generative AI tools for various purposes, but only 20 per cent have guidelines in place. WAN-IFRA’s Teemu Henriksson provides a summary of the report.

And one more thing

Charlie Guo for Artificial Ignorance: